I recently gave a talk at the conference of the Metabolomics society. After the talk, Oliver Fiehn asked, “when will we finally have FDR estimation in metabolite annotation?” This is a good question: False Discovery Rates (FDR) and FDR estimation have been tremendously helpful in genomics, transcriptomics and proteomics. In particular, FDRs have been extremely helpful for peptide annotation in shotgun proteomics. Hence, when will FDR estimation finally become a reality for metabolite annotation from tandem mass spectrometry (MS/MS) data? After all, the experimental setups of shotgun proteomics and untargeted metabolomics, both using Liquid Chromatography (LC) for separation, are highly similar. By the way, if I talk about metabolites below, this is meant to also include other small molecules of biological interest. From the standpoint of computational methods, they are indistinguishable.

My answer to this question was one word: “Never.” I was about to give a detailed explanation for this one-word answer, but before I could say a second word, the session chair said, “it is always good to close the session on a high note, so let us stop here!” and ended the session. (Well played, Tim Ebbels.) But this is not how I wanted to answer this question! In fact, I have been thinking about this question for several years now; as noted above, I believe that it is an excellent question. Hence, it might be time to share my thoughts on the topic. Happy to hear yours! I will start off with the basics; if you are familiar with the concepts, you can jump to the last five paragraphs of this text.

The question that we want to answer is as follows: We are given a bag of metabolite annotations from an LC-MS/MS run. For every query spectrum, this is the best fit (best-scoring candidate) from the searched database, and will be called “hit” in the following. Can we estimate the fraction of hits that are incorrect? Can we do so for a subset of hits? More precisely, we will sort annotations by some score, such as the cosine similarity for spectral library search. If we only accept those hits with score above (or below, it does not matter) a certain threshold, can we estimate the ratio of incorrect hits for this particular subset? In practice, the user selects an arbitrary FDR threshold (say, one percent), and we then find the smallest score threshold so that the hits with score above the score threshold have an estimated FDR below or equal to the FDR threshold. (Yes, there are two thresholds, but we can handle that.)
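
The two-threshold procedure can be sketched in a few lines of Python. This is only an illustration: `estimate_fdr` is a hypothetical callback standing in for whatever FDR estimation method is used, and I assume that higher scores are better and that scores are distinct.

```python
from typing import Callable, Optional, Sequence


def smallest_score_threshold(
    scores: Sequence[float],
    estimate_fdr: Callable[[int], float],
    max_fdr: float,
) -> Optional[float]:
    """Return the smallest score threshold whose accepted hits stay within max_fdr.

    scores: one score per hit (assumption: higher is better, no ties).
    estimate_fdr(k): hypothetical estimator for the FDR of the k top-scoring hits.
    Returns None if no cutoff satisfies the FDR threshold.
    """
    ranked = sorted(scores, reverse=True)
    best = None
    for k in range(1, len(ranked) + 1):
        # The FDR need not grow monotonically with k, so every cutoff is checked.
        if estimate_fdr(k) <= max_fdr:
            best = ranked[k - 1]
    return best
```

Note that the loop does not stop at the first cutoff that exceeds the FDR threshold, because a later, larger set of hits may again fall below it.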

Let us start with the basic definition. For a given set of annotations, the False Discovery Rate (FDR) is the number of incorrect annotations, divided by the total number of annotations. FDR is usually reported as a percentage. To compute FDR, you have to have complete knowledge; you can only compute it if you know upfront which annotations are correct and which are incorrect. Sad but true. This value is often referred to as “exact FDR” or “true FDR”, to distinguish it from the estimates we want to determine below. Obviously, you can compute exact FDR for metabolite annotations, too; the downside is that you need complete knowledge. Hence, this insight is basically useless, unless you are some demigod or all-knowing demon. For us puny humans, we do not know upfront which annotations are correct and which are incorrect. The whole concept of FDR and FDR estimation would be useless if we knew: If we knew, we could simply discard the incorrect hits and continue to work with the correct ones.
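
For completeness, here is the definition as a tiny Python sketch. The function name and the convention for an empty set are my own choices for illustration; the input is exactly the complete knowledge we usually do not have.

```python
from typing import Sequence


def exact_fdr(is_correct: Sequence[bool]) -> float:
    """Exact FDR: number of incorrect annotations divided by all annotations.

    is_correct: one flag per annotation; this requires knowing the truth.
    """
    if not is_correct:
        return 0.0  # convention chosen here: an empty set has FDR 0
    incorrect = sum(1 for ok in is_correct if not ok)
    return incorrect / len(is_correct)
```

For example, `exact_fdr([True, True, False, True])` gives 0.25, that is, 25%.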

To this end, a method for FDR estimation tries to estimate the FDR of a given set of annotations, without having complete knowledge. It is important to understand the part that says, “tries to estimate”. Just because a method claims it is estimating FDR, does not mean it is doing a good job, or even anything useful. For example, consider a random number generator that outputs random values between 0 and 1: This IS an FDR estimation method. It is neither accurate nor useful, but that is not required in the definition. Also, a method for FDR estimation may always output the same, fixed number (say, always-0 or always-1). Again, this is a method for FDR estimation; again, it is neither accurate nor useful. Hence, be careful with papers that claim to introduce an FDR estimation method, but fail to demonstrate that these estimates are accurate or at least useful.

But how can we demonstrate that a method for FDR estimation is accurate or useful? Doing so is somewhat involved because FDR estimates are statistical measures, and in theory, we can only ask if they are accurate for the expected value of the estimate. Yet, if someone presents a novel method for FDR estimation, the minimum to ask for is a series of q-value plots that compare estimated q-values and exact q-values: A q-value is the smallest FDR for which a particular hit is part of the output. Also, you might want to see the distribution of p-values, which should be uniform. You probably know p-values; if you can estimate FDR, chances are high that you can also estimate p-values. Both evaluations should be done for multiple datasets, to deal with the stochastic nature of FDR estimation. Also, since you have to compute exact FDRs, the evaluation must be done for reference datasets where the true answer is known. Do not confuse “the true answer is known” and “the true answer is known to the method”; obviously, we do not tell our method for FDR estimation what the true answer is. If a paper introduces “a method for FDR estimation” but fails to present convincing evaluations that the estimated FDRs are accurate or at least useful, then you should be extremely careful.
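
To make the q-value definition concrete, here is a minimal sketch that computes exact q-values for a reference dataset where the truth is known. The function name and input layout are assumptions for illustration; I again assume that higher scores are better and that scores are distinct.

```python
from typing import List, Sequence, Tuple


def exact_q_values(hits: Sequence[Tuple[float, bool]]) -> List[float]:
    """Exact q-values for hits given as (score, is_correct) pairs.

    Returns one q-value per hit, ordered by decreasing score.
    """
    ranked = sorted(hits, key=lambda h: h[0], reverse=True)

    # Exact FDR of the k top-scoring hits, for every cutoff k.
    fdrs = []
    incorrect = 0
    for k, (_, ok) in enumerate(ranked, start=1):
        if not ok:
            incorrect += 1
        fdrs.append(incorrect / k)

    # q-value of a hit: the smallest FDR over all cutoffs that still include it.
    q_values = list(fdrs)
    for i in range(len(q_values) - 2, -1, -1):
        q_values[i] = min(q_values[i], q_values[i + 1])
    return q_values
```

Estimated q-values are obtained the same way, with the exact FDRs replaced by the estimates; a q-value plot then compares the two lists.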

Now, how does FDR estimation work in practice? In the following, I will concentrate on shotgun proteomics and peptide annotation, because this task is most similar to metabolomics. There, target-decoy methods have been tremendously successful: You transform the original database you search in (called target database in the following) into a second database that contains only candidates that are incorrect. This is the decoy database. The trick is to make the candidates from the decoy database “indistinguishable” from those in the target database, as further described below. In shotgun proteomics, it is surprisingly easy to generate a useful decoy database: For every peptide in the target database, you generate a peptide in the decoy database for which you read the amino acid sequence from back to front. (In more detail, you leave the last amino acid of the peptide untouched, for reasons that are beyond what I want to discuss here.)
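
The reversal trick fits in a single line of Python. The function name is mine, and peptides are assumed to be plain one-letter amino acid strings.

```python
def reversed_decoy(peptide: str) -> str:
    """Read the sequence from back to front, leaving the last amino acid in place."""
    if len(peptide) < 2:
        return peptide  # nothing to reverse
    return peptide[:-1][::-1] + peptide[-1]
```

For example, `reversed_decoy("PEPTIDEK")` gives `"EDITPEPK"`. If the part before the last amino acid happens to be a palindrome, the decoy equals the target, so a real pipeline would have to deal with such cases separately.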

To serve its purpose, a decoy database must fulfill three conditions: (i) There must be no overlap between target and decoy database; (ii) no candidate from the decoy database may ever be the correct answer; and (iii), false hits in the target database have the same probability to show up as (always false) hits from the decoy database. For (i) and (ii), we can be relatively relaxed: We can interpret “no overlap” as “no substantial overlap”. This will introduce a tiny error in our FDR estimation, which is presumably irrelevant in comparison to the error that is an inevitable part of FDR estimation. For (ii), this means that whenever a search with a query spectrum returns a candidate from the decoy database, this is definitely not the correct answer. The most important condition is (iii), and if we look more precisely, we will even notice that we have to demand more: That is, the score distribution of false hits from the target database is identical to the score distribution of hits from the decoy database. If our decoy database fulfills all three conditions, then we can use a method such as Target-Decoy Competition and utilize hits from the decoy database to estimate the ratio of incorrect hits from the target database. Very elegant in its simplicity.
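
As a rough sketch of how Target-Decoy Competition can turn decoy hits into an estimate: every query is searched against target and decoy database together, only the best-scoring candidate per query is kept, and above a score threshold, the number of decoy winners serves as a stand-in for the number of false target winners. The function name, the input layout and the plain decoys-over-targets ratio are assumptions for illustration; published variants differ in details such as adding one to the decoy count.

```python
from typing import Sequence, Tuple


def tdc_estimated_fdr(
    best_hits: Sequence[Tuple[float, bool]], score_threshold: float
) -> float:
    """Estimate the FDR among target hits with score >= score_threshold.

    best_hits: per query, (score, is_decoy) of the winner of the competition
    between target and decoy candidates (assumption: higher score is better).
    """
    targets = sum(1 for s, d in best_hits if s >= score_threshold and not d)
    decoys = sum(1 for s, d in best_hits if s >= score_threshold and d)
    if targets == 0:
        return 0.0  # convention chosen here: no accepted target hits, FDR 0
    # Under condition (iii), false target winners are about as frequent as decoy winners.
    return min(1.0, decoys / targets)
```

This estimate is only as good as condition (iii): if false target hits do not behave like decoy hits, the ratio still produces a number, but not a meaningful one.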

Enough of the details, let us talk about untargeted metabolomics! Can we generate a decoy database that fulfills the three requirements? Well, it is difficult, and you might think that this is why I argue that FDR estimation is impossible here: Looks like we need a method to produce metabolite structures that look like true metabolites (or, more generally, small molecules of biological interest; it does not matter). Wow, that is already hard: we cannot simply use random molecular structures, because they will not look like the real thing, see (iii). In fact, a lot of research is currently thrown at this problem, as it would potentially allow us to find the next super-drug. Also, how could we guarantee not to accidentally generate a true metabolite, see (ii)? Next, looks like we need a method to simulate a mass spectrum for a given (arbitrary) molecular structure. Oopsie daisies, that is also pretty hard! Again, ongoing research, far from being solved, loads of papers, even a NeurIPS competition coming up.

So, are these the problems we have to solve to do FDR estimation, and since we cannot do those, we also cannot do FDR? In other words, if, in a decade or so, we finally had a method that can produce decoy molecular structures, and another method that simulates high-quality mass spectra, would we have FDR estimation? Unfortunately, the answer is no. In fact, we do not even need those methods: In 2017, my lab developed a computational method that transforms a target spectral library into a decoy spectral library, completely avoiding the nasty pitfalls of generating decoy structures and simulating mass spectra. Also, other computational methods (say, naive Bayes) completely avoid generating a decoy database.

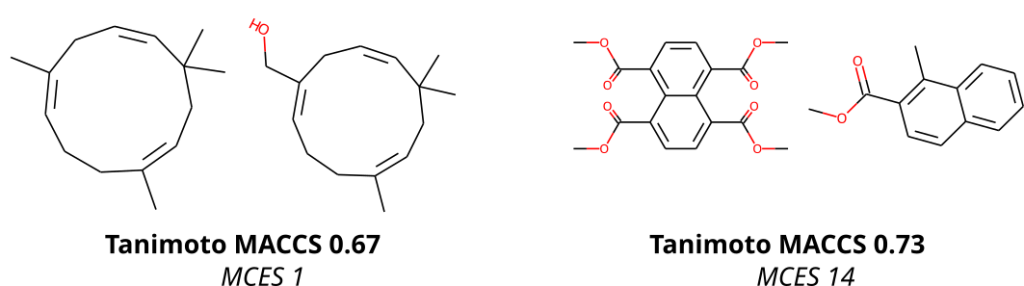

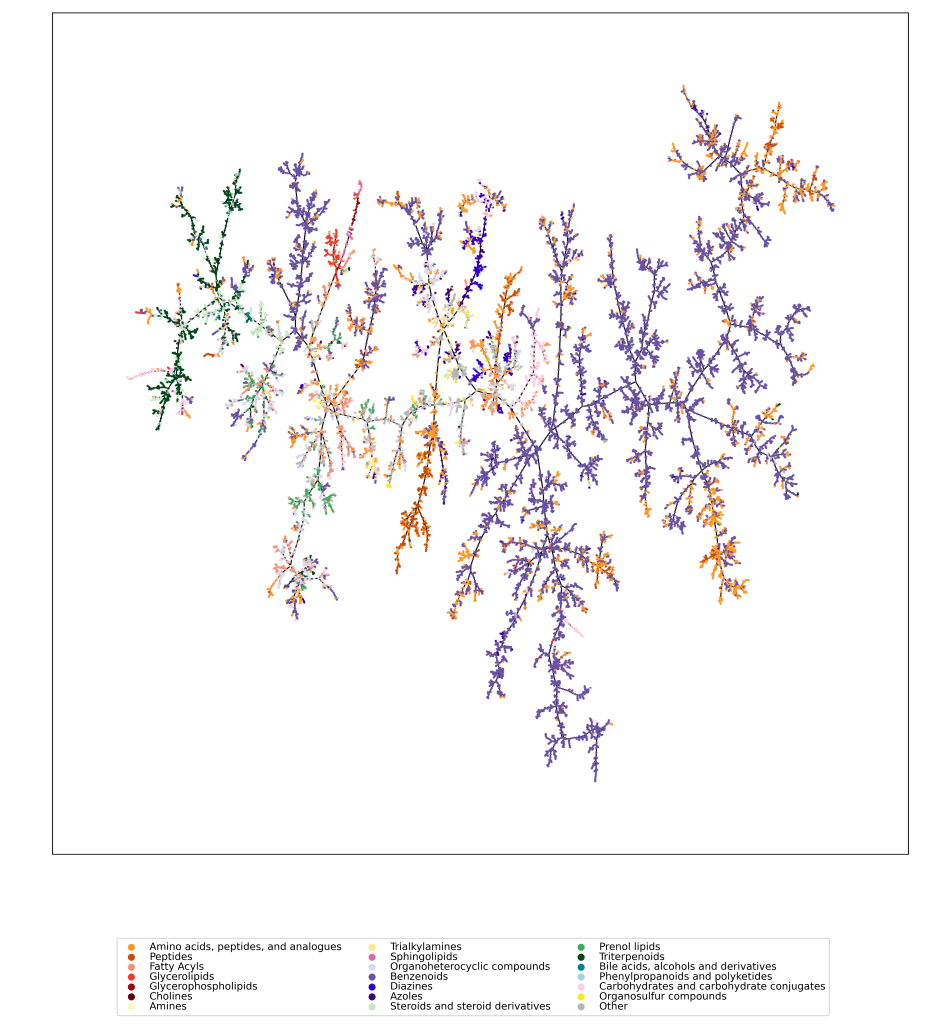

The true problem is that we are trying to solve a non-statistical problem with a statistical measure, and that is not going to work, no matter how much we think about the problem. I repeat the most important sentence from above: “False hits in the target database have the same probability to show up as hits from the decoy database.” This sentence, and all stochastic procedures for FDR estimation, assume that a false hit in the target database is something random. In shotgun proteomics, this is a reasonable assumption: The inverted peptides we used as decoys basically look random, and so do all false hits. The space of possible peptides is massive, and the target peptides lie almost perfectly separated in this ocean of non-occurring peptides. Biological peptides are sparse and well-separated, so to say. But this is not the case for metabolites and other molecules of biological interest. If an organism has learned how to make a particular compound, it will often also be able to synthesize numerous compounds that are structurally extremely similar. A single hydrogen replaced by a hydroxy group, or vice versa. A hydroxy group “moving by a single carbon atom”. Everybody who has ever looked at small molecules will have noticed that. Organisms invest a lot of energy to make proteins for exactly this purpose. But this is not only a single organism; the same happens in biological communities, such as the microbiota in our intestines or on our skin. It even happens when no organisms are around. In short: No metabolite is an island.

Sadly, this is the end of the story. Let us assume that we have identified small molecule A for a query MS/MS when in truth, the answer should be B. In untargeted metabolomics and related fields, this usually means that A and B are structurally highly similar, maybe to the point of a “moving hydroxy group”. Both A and B are valid metabolites. There is nothing random or stochastic about this incorrect annotation. Maybe, the correct answer B was not even in the database we searched in; potentially, because it is a “novel metabolite” currently not known to mankind. Alternatively, both compounds were in the database, and our scoring function for deciding on the best candidate simply did not return the correct answer. This will happen, inevitably: Otherwise, you again need a demigod or demon to build the scoring function. Consequently, speaking about the probability of an incorrect hit in the target database, cannot catch the non-random part of such incorrect hits. There is no way to come up with an FDR estimation method that is accurate, because the process itself is not stochastic. Maybe, some statisticians will develop a better solution some day, but I argue that there is no way to ever “solve it for good”, given our incomplete knowledge of what novel metabolites remain to be found out there.

Similar arguments, by the way, hold true for the field of shotgun metaproteomics: There, our database contains peptides from multiple organisms. Due to homologous peptide sequences in different organisms, incorrect hits are often not random. In particular, there is a good chance that if PEPTIDE is in your database, then so is PEPITDE. Worse, one can be in the database you search and one in your biological sample. I refrain from discussing further details; after all, we are talking about metabolites here.

But “hey!”, you might say, “Sebastian, you have published methods for FDR estimation yourself!” Well, that is true: Beyond the 2017 paper mentioned above, the confidence score of COSMIC is basically trying to estimate the Posterior Error Probability of a hit, which is a statistical measure again closely related to FDRs and FDR estimation. Well, you thought you got me there, did you? Yet, do you remember that above, I talked about FDR estimation methods that are accurate or useful? The thing is: FDR estimation methods from shotgun proteomics are enviably accurate, with precise estimates at FDR 1% and below. Yet, even if our FDR estimation methods in metabolomics can never be as accurate as those from shotgun proteomics, that does not mean they cannot be useful! We simply have to accept the fact that our numbers will not be as accurate, and that we have to interpret those numbers with a grain of salt.

The thing is, ballpark estimates can be helpful, too. Assume you have to jump, in complete darkness, into a natural pool of water below. I once did that, in a cave in New Zealand. It was called “cave rafting”, not sure if they still offer that type of organized madness. (Just checked, they do, highly recommended.) Back then, in almost complete darkness, our guide told me to jump; that I would fall for about a meter, and that the water below was two meters deep. I found this information to be extremely reassuring and helpful, but I doubt it was very accurate. I did not do exact measurements after the jump, but it is possible that I fell for only 85 cm; possibly, the water was 3 m deep. Yet, what I really, really wanted to know at that moment, was: Is it an 8 m jump into a 30 cm pond? I would say the guide did a good job. His estimates were useful.

I stress that my arguments should not be taken as an excuse to do a lazy evaluation. Au contraire! As a field, we must insist that all methods marketed as FDR estimation methods are evaluated extensively as such. FDR estimations should be as precise as possible, and evaluations as described above are mandatory. Because only this can tell us how far we can trust the estimates. Because only this can convince us that estimates are indeed useful. Trying to come up with more accurate FDR estimates is a very, very challenging task, and trying to do so may be in vain. But remember: We choose to do these things, not because they are easy, but because they are hard.

I got the impression that few people in untargeted metabolomics and related fields are familiar with the concept of FDR and FDR estimation for annotation. This strikes me as strange, given the success of these concepts in other OMICS fields. If you want to learn more, I do not have the perfect link or video for you, but I tried my best to explain it in my videos about COSMIC, see here. If you have a better introduction, let me know!

Funny side note: If you were using a metascore, your FDR estimates from target-decoy competition would always be 0%. As noted, a method for FDR estimation need not return accurate or useful values, and a broken scoring can easily also break FDR estimation. Party on!